When working with WordPress or other open source content platforms that offer plugins, it is common to find multiple robots meta tags on a page. If a page is set to noindex and you didn't set it yourself, here is a simple way to find out whether your site has multiple meta robots tags.

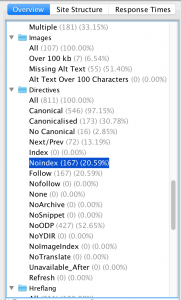

Simply fire up Screaming Frog, enter your site's URL into the bar at the top and start the crawl. Let the scan complete, then browse to the Noindex view in the Directives folder.

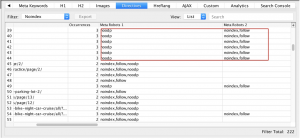

You can also reach this section manually by scrolling over to the Directives tab in the main window and filtering for Noindex.

What you are looking for here is which pages have noindex set. Be careful: some pages may be set to noindex for a good reason, so it's important to have a solid understanding of what noindex does and why it is used before changing anything.

It's possible to have multiple robots tags on one page. Screaming Frog lists each one in its own column in the report view, i.e. Meta Robots 1, Meta Robots 2, and so on.
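To double-check a single page outside Screaming Frog, you can look at its HTML source yourself. Here is a minimal Python sketch using the standard-library `html.parser` module; `RobotsMetaCollector` and `robots_meta_tags` are illustrative names, not part of any tool, and the sketch only sees tags present in the raw HTML, not ones injected later by JavaScript.

```python
from html.parser import HTMLParser


class RobotsMetaCollector(HTMLParser):
    """Collects the content of every <meta name="robots"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        # The parser lowercases tag and attribute names; attrs is a list of (name, value) pairs.
        if tag != "meta":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        # Attribute *values* keep their original case, so normalise before comparing.
        if attr_map.get("name", "").strip().lower() == "robots":
            self.directives.append(attr_map.get("content", "").strip())


def robots_meta_tags(html: str) -> list[str]:
    """Return the content of each robots meta tag, in document order."""
    collector = RobotsMetaCollector()
    collector.feed(html)
    collector.close()
    return collector.directives


if __name__ == "__main__":
    # Sample markup with two conflicting robots tags.
    page = """
    <html><head>
      <meta name="robots" content="index, follow">
      <meta name="robots" content="noindex, follow">
    </head><body></body></html>
    """
    tags = robots_meta_tags(page)
    for i, content in enumerate(tags, start=1):
        print(f"Meta Robots {i}: {content}")
    if len(tags) > 1:
        print("Warning: multiple robots meta tags found")
    if any("noindex" in t.lower() for t in tags):
        print("At least one tag sets noindex")
```

Paste a page's source into `page` and the printout follows the same Meta Robots 1, Meta Robots 2 numbering as the report view.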

To fix the issue, start by looking at your plugins. Check whether more than one SEO plugin is active. If so, disable any you don't use or that were recently added. For example, if you have both Yoast and All in One SEO installed but only use one, disable the other.
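If you have shell access with WP-CLI installed (an assumption; not every host provides it), `wp plugin list --status=active --format=json` prints the active plugins as JSON, with each plugin's slug in the `name` field. The sketch below checks that output against a small, hypothetical list of SEO plugin slugs so overlapping plugins stand out.

```python
import json

# Hypothetical starting list: wordpress.org slugs of the two plugins named above.
# Add any other SEO plugins your site might use.
KNOWN_SEO_PLUGINS = {
    "wordpress-seo": "Yoast SEO",
    "all-in-one-seo-pack": "All in One SEO",
}


def active_seo_plugins(plugin_list_json: str) -> list[str]:
    """Return the display names of known SEO plugins found in the list.

    Expects the JSON printed by `wp plugin list --status=active --format=json`;
    the --status=active filter means every entry is already an active plugin.
    """
    plugins = json.loads(plugin_list_json)
    found = []
    for plugin in plugins:
        slug = plugin.get("name", "")  # WP-CLI reports the plugin slug as "name"
        if slug in KNOWN_SEO_PLUGINS:
            found.append(KNOWN_SEO_PLUGINS[slug])
    return found


if __name__ == "__main__":
    # Sample output for illustration only.
    sample = (
        '[{"name": "wordpress-seo", "status": "active"},'
        ' {"name": "all-in-one-seo-pack", "status": "active"}]'
    )
    seo = active_seo_plugins(sample)
    if len(seo) > 1:
        print("More than one SEO plugin is active:", ", ".join(seo))
```

Save the command's output to a file and pass its text to `active_seo_plugins`; more than one result is a good place to start disabling.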

Run another scan and check whether the extra robots tag has disappeared. If it hasn't, work your way through the remaining plugins, disabling those not in use, until you find the conflict.
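To compare scans, you can export the Directives report after each crawl and check which pages still carry more than one robots tag. This Python sketch assumes the CSV export has an `Address` column and numbered columns named `Meta Robots 1`, `Meta Robots 2`, and so on, matching the report view; check the headers in your own export, as they may vary between Screaming Frog versions.

```python
import csv
import io


def pages_with_multiple_robots_tags(csv_text: str) -> set[str]:
    """Return the addresses of pages with more than one Meta Robots column filled.

    Assumes the first row is the header, with an "Address" column and
    columns whose names start with "Meta Robots" (e.g. "Meta Robots 1").
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    flagged = set()
    for row in reader:
        filled = [
            value
            for column, value in row.items()
            # column is None for surplus fields; value is None for missing ones
            if column and column.startswith("Meta Robots") and value and value.strip()
        ]
        if len(filled) > 1:
            flagged.add(row["Address"])
    return flagged


def compare_scans(before_csv: str, after_csv: str) -> tuple[set[str], set[str]]:
    """Return (pages fixed since the first scan, pages still affected)."""
    before = pages_with_multiple_robots_tags(before_csv)
    after = pages_with_multiple_robots_tags(after_csv)
    return before - after, after
```

Read each export with `open(path, encoding="utf-8-sig", newline="")` in case it starts with a byte-order mark, then pass both file contents to `compare_scans`.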

It's possible the issue comes from the active theme rather than the plugins. This can be harder to track down, so you can either dig through the template files to see whether a robots tag is set there, or temporarily switch to a different theme to see whether the issue clears up.
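If you go through the template files, a quick text search saves opening each one by hand. This sketch walks a theme folder for `.php` files and reports lines mentioning common robots-related strings; the pattern list is a hypothetical starting point (it includes the name of WordPress's `wp_robots` filter), so expect some false positives and adjust it for your theme.

```python
from pathlib import Path

# Hypothetical patterns that often indicate a template prints or alters a robots tag.
# Matching is case-insensitive because each line is lowercased first.
PATTERNS = ('name="robots"', "name='robots'", "noindex", "wp_robots")


def find_robots_in_theme(theme_dir: str) -> list[tuple[str, int, str]]:
    """Search a theme's PHP templates for lines mentioning robots directives.

    Returns (file path, line number, stripped line) for each matching line.
    """
    matches = []
    for path in sorted(Path(theme_dir).rglob("*.php")):
        # errors="replace" keeps the scan going if a file isn't valid UTF-8
        text = path.read_text(encoding="utf-8", errors="replace")
        for lineno, line in enumerate(text.splitlines(), start=1):
            lowered = line.lower()
            if any(pattern in lowered for pattern in PATTERNS):
                matches.append((str(path), lineno, line.strip()))
    return matches
```

Call it with your theme's folder, e.g. `find_robots_in_theme("wp-content/themes/your-theme")` (a placeholder path), and review each hit by hand before changing anything.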